Workshop: Building an AI Voice Agent to Automate a Robot Cafe with Gemini Live and Angular

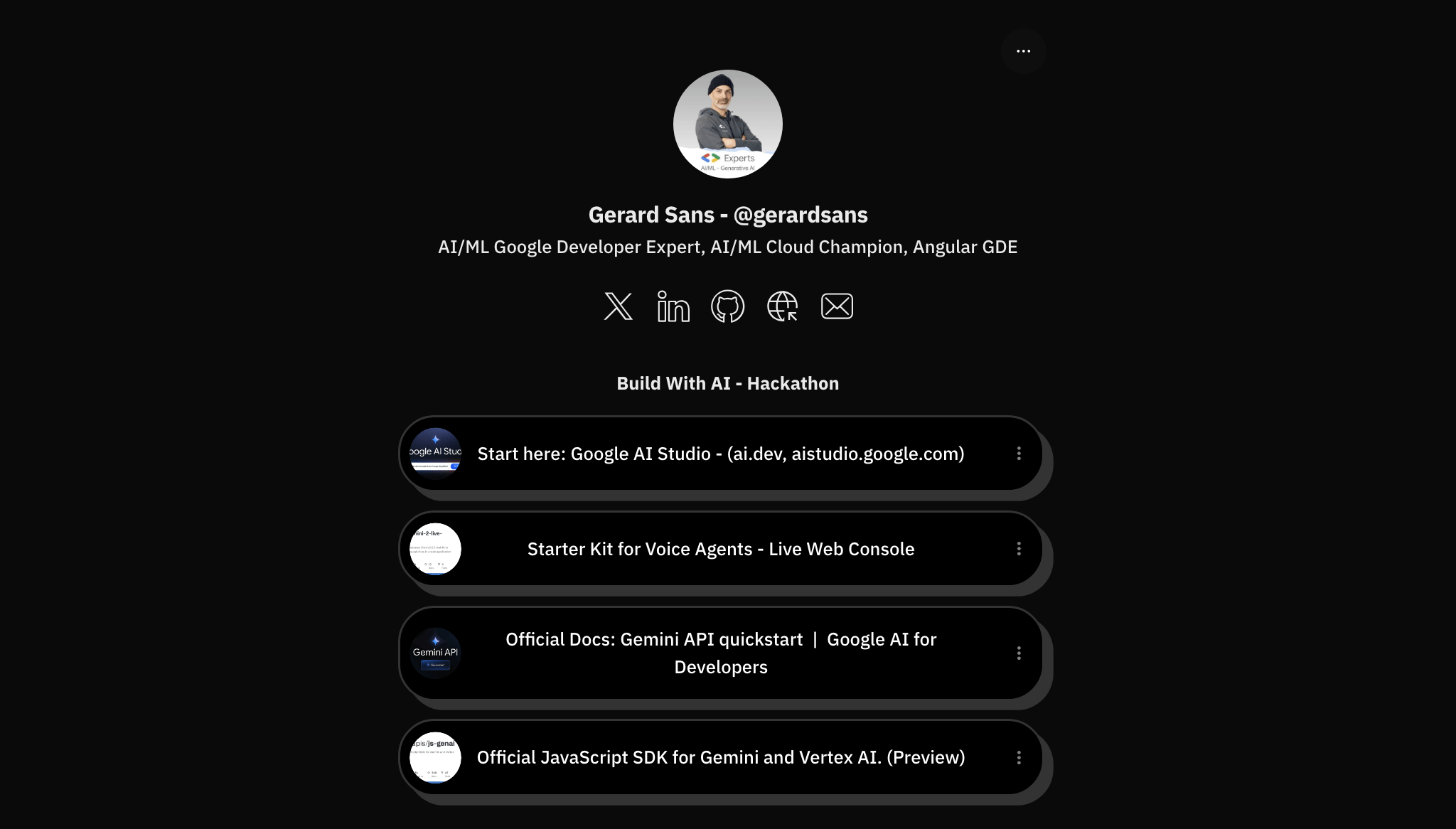

By Gerard Sans

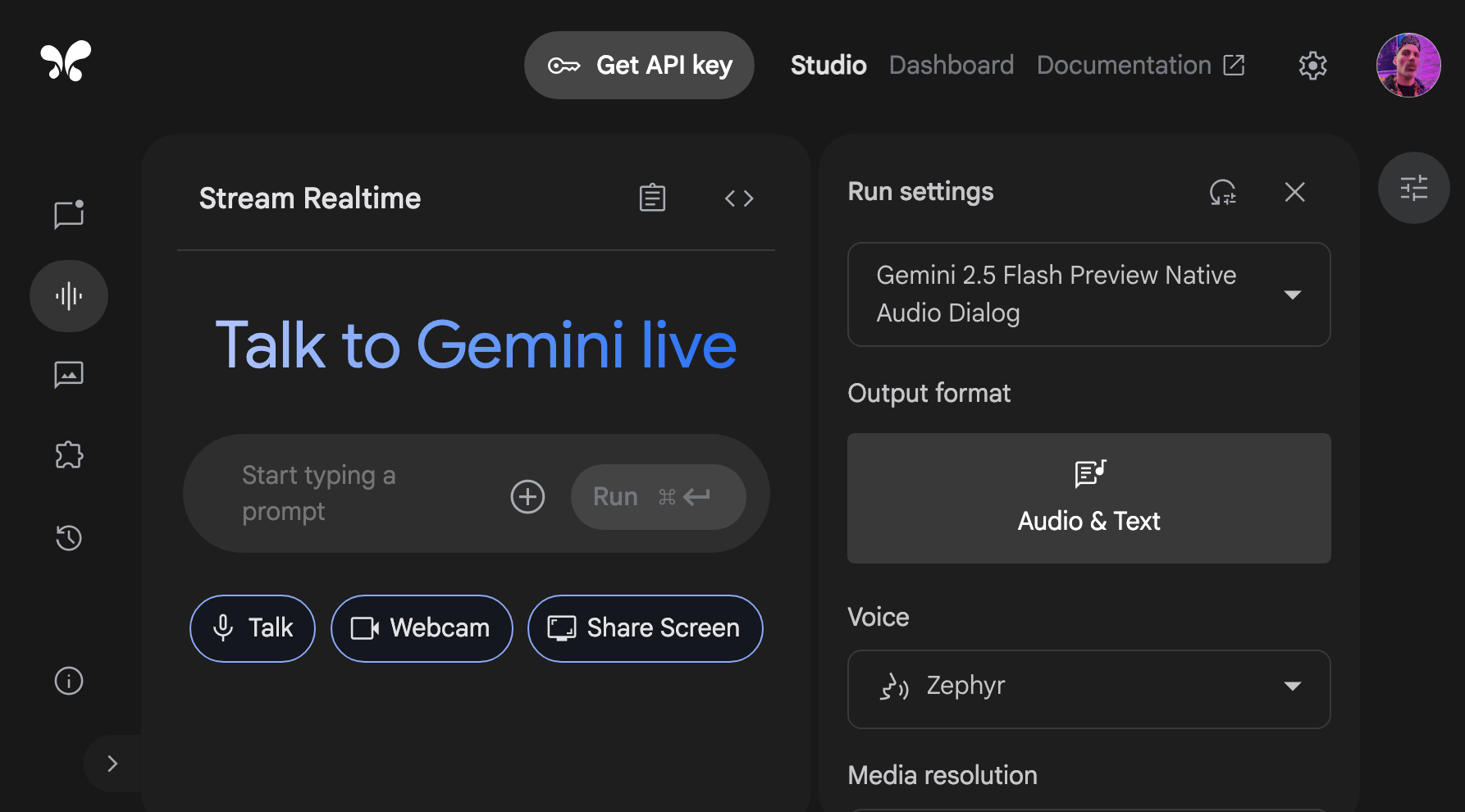

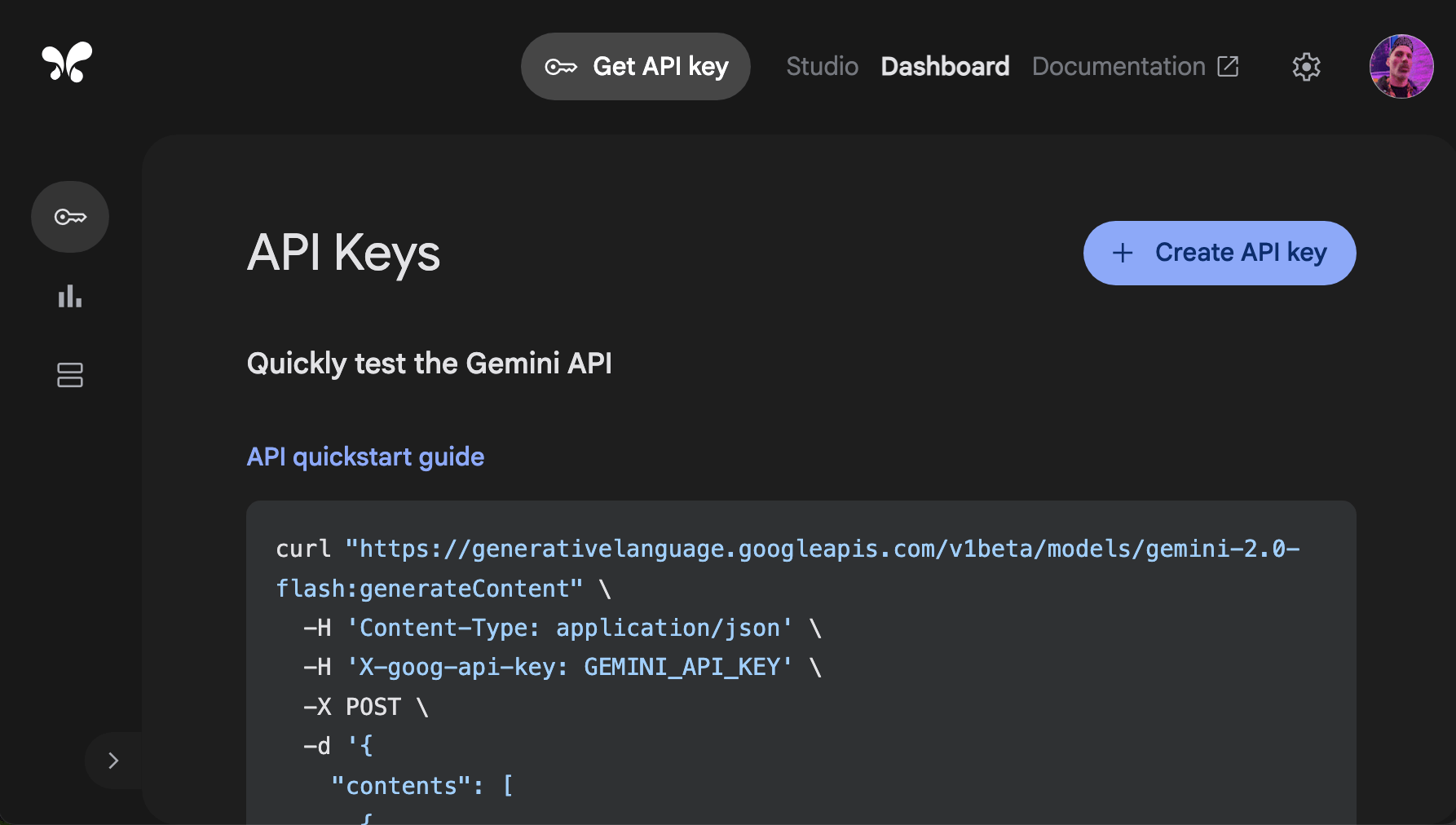

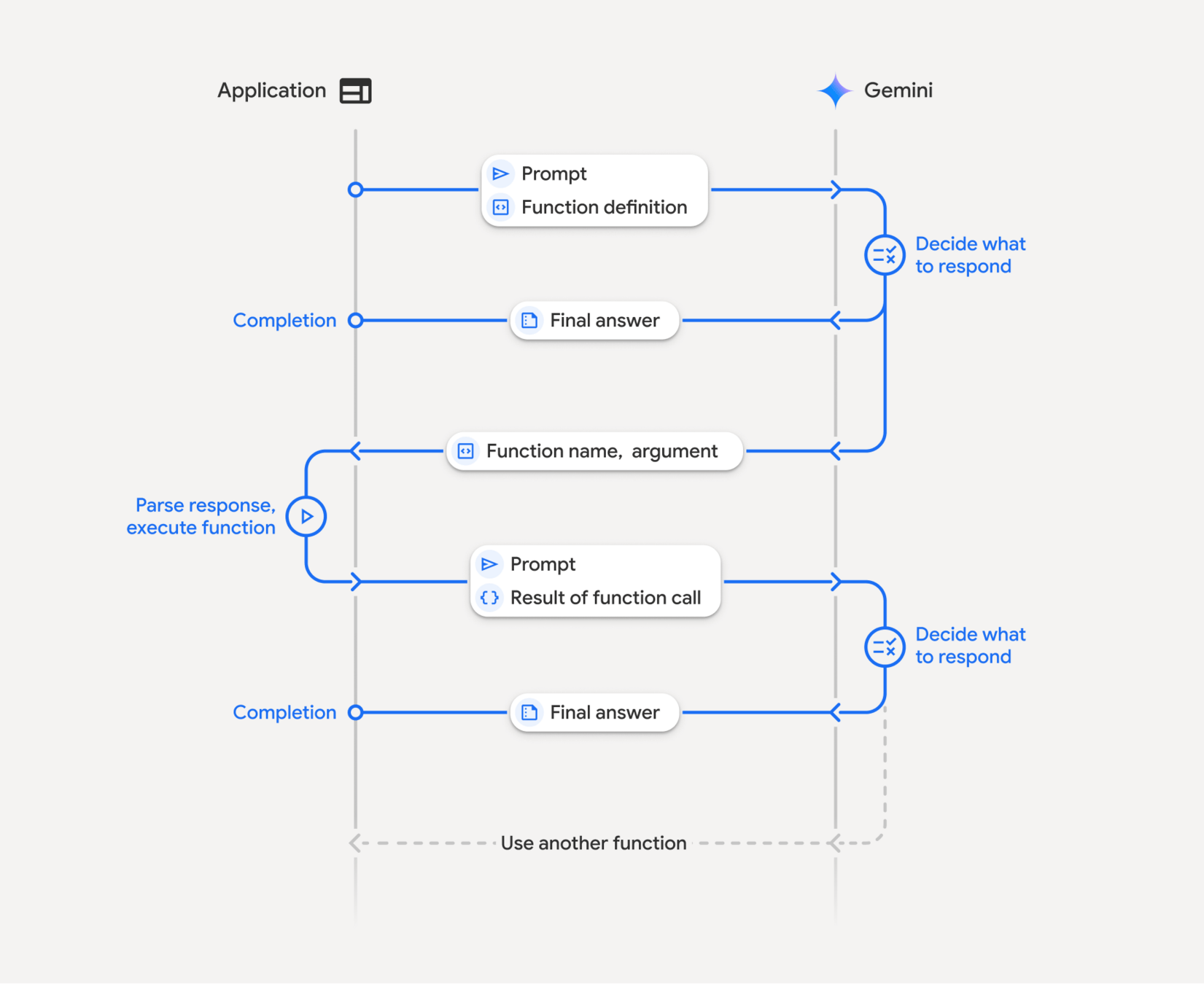

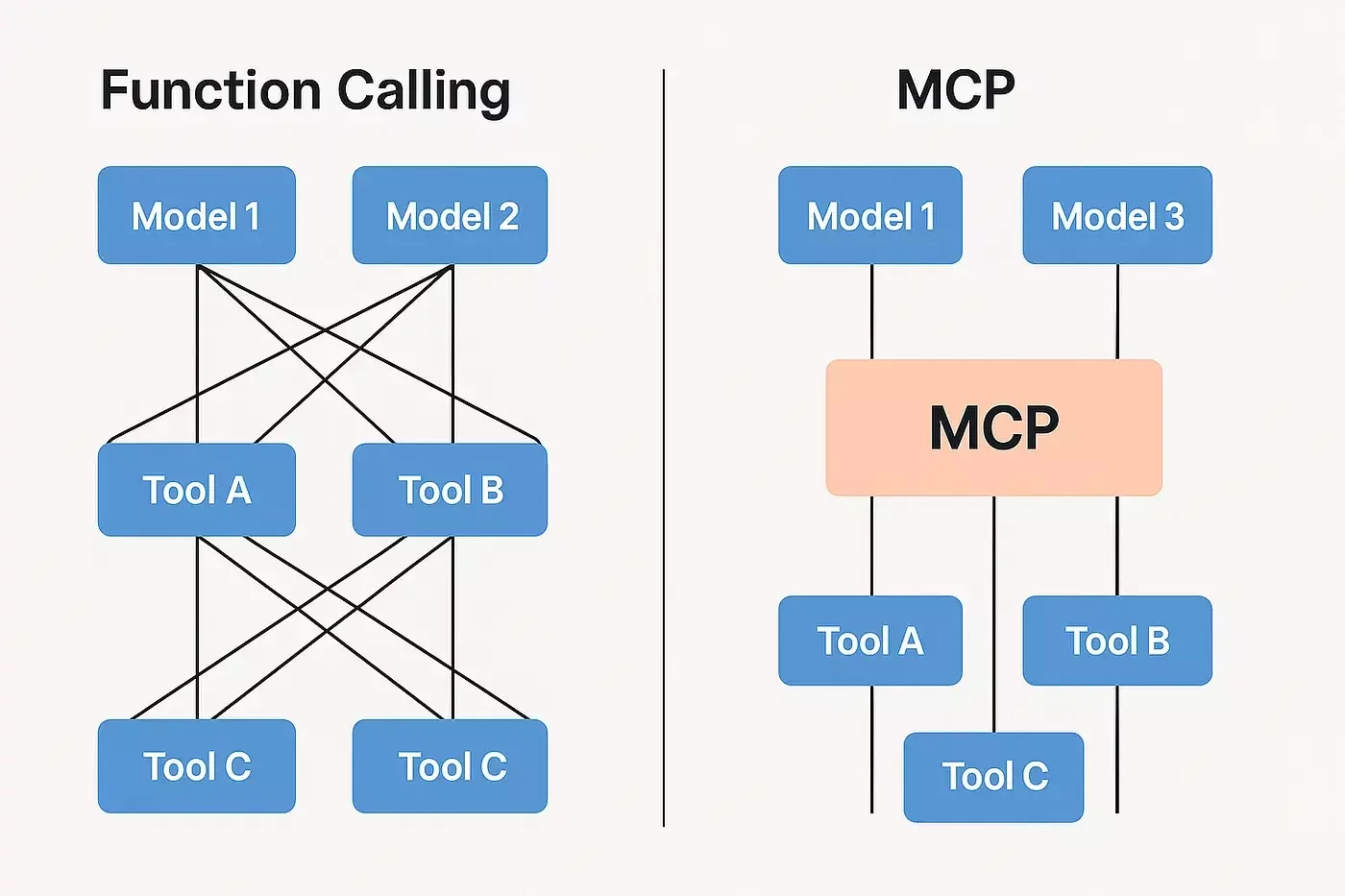

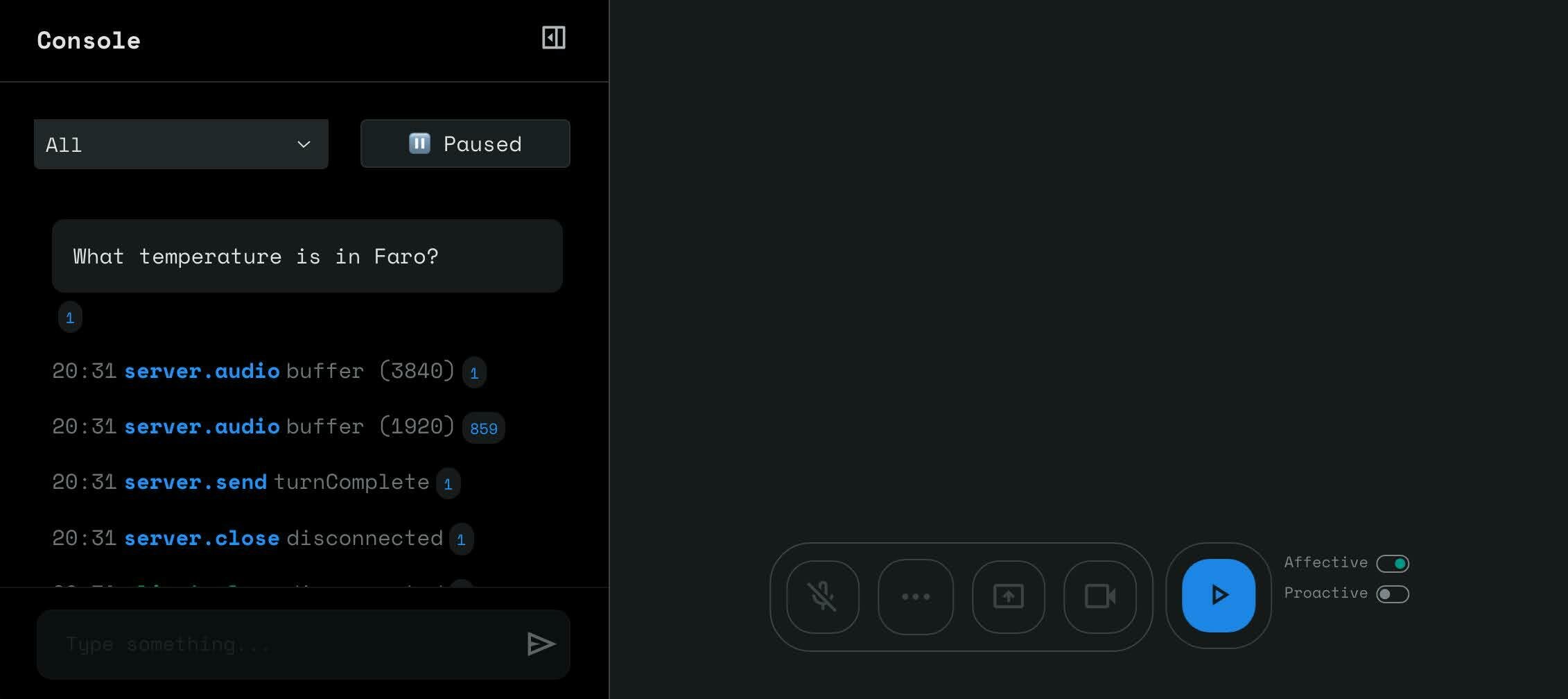

The launch of Alexa+ has sparked renewed excitement around the next generation of AI voice assistants powered by generative AI. With Gemini 2.0 and the new Gemini Live API, developers now have the tools to build voice-driven AI agents that integrate with web applications, backend services, and third-party APIs. In this workshop, we will go beyond simple chatbot interactions to explore how AI agents can power real-world automation: in this case, running an entire robot cafe. We’ll walk through building a voice-first assistant capable of executing complex workflows, streaming real-time audio, querying databases, and interacting with external services. This marks a shift from "ask and respond" to a more dynamic "talk, show, and act" experience.

You might assume taking a coffee order is straightforward, but even a basic interaction involves more than 15 distinct states. These include greeting the customer, handling the order flow, confirming selections, applying offer codes, managing exceptions, and supporting cancellations or changes. Behind the scenes, the AI agent coordinates with multiple systems to fetch menu data, validate inputs, and trigger robotic actions.

On the frontend, we’ll build an Angular client from scratch that handles real-time audio input and output. You’ll learn how to stream microphone data, integrate with Gemini voice responses, and use the GenAI SDK to connect everything together. We’ll also cover how to tap into core Gemini capabilities such as code execution, grounding with Google Search, and function calling, enabling a true AI agent that can manage workflows, make decisions, and take action in real time.

Instead of a traditional chat UI, this project creates a fully voice-automated, hands-free experience where the assistant doesn’t just chat; it runs the operation.

Join us for a deep dive into the future of AI automation, where natural voice is the interface and the AI agent takes care of the rest, including your fancy choice of coffee!
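The coffee-order flow described above, with its greeting, confirmation, offer codes, exceptions, and cancellations, is easier to reason about when the states are explicit rather than implied by a prompt. Here is a minimal TypeScript sketch, assuming the order flow is modelled as a state machine on the application side. The state names, event types, and the `transition` function are hypothetical and cover only a simplified subset of the 15+ states; in an agent setup, each function call the model makes could be translated into one such event, but that mapping is an assumption, not the workshop's implementation.

```typescript
// Hypothetical, simplified subset of a coffee-order flow.
// State and event names are illustrative, not taken from the workshop code.
type OrderState =
  | "greeting"
  | "takingOrder"
  | "confirming"
  | "applyingOffer"
  | "brewing"
  | "completed"
  | "cancelled";

type OrderEvent =
  | { type: "GREETED" }
  | { type: "ITEM_ADDED"; item: string }
  | { type: "CONFIRM" }
  | { type: "CHANGE" }
  | { type: "OFFER_CODE"; code: string }
  | { type: "OFFER_RESULT"; valid: boolean }
  | { type: "BREW_DONE" }
  | { type: "CANCEL" };

interface Order {
  state: OrderState;
  items: string[];
  offerCode?: string;
  errors: string[]; // rejected events, kept so the agent can ask the customer to clarify
}

// Record an event that is not valid in the current state without changing state.
function reject(order: Order, event: OrderEvent): Order {
  return { ...order, errors: [...order.errors, `unexpected ${event.type} in ${order.state}`] };
}

// Pure transition function: returns a new Order and never mutates its input.
function transition(order: Order, event: OrderEvent): Order {
  // Cancellation is accepted from any state before brewing starts.
  if (event.type === "CANCEL") {
    const cancellable = order.state !== "brewing" && order.state !== "completed";
    return cancellable ? { ...order, state: "cancelled" } : reject(order, event);
  }
  switch (order.state) {
    case "greeting":
      if (event.type === "GREETED") return { ...order, state: "takingOrder" };
      break;
    case "takingOrder":
      if (event.type === "ITEM_ADDED") return { ...order, items: [...order.items, event.item] };
      // Only move on to confirmation once at least one item has been ordered.
      if (event.type === "CONFIRM" && order.items.length > 0) return { ...order, state: "confirming" };
      break;
    case "confirming":
      if (event.type === "CHANGE") return { ...order, state: "takingOrder" };
      if (event.type === "OFFER_CODE") return { ...order, state: "applyingOffer", offerCode: event.code };
      if (event.type === "CONFIRM") return { ...order, state: "brewing" };
      break;
    case "applyingOffer":
      if (event.type === "OFFER_RESULT") {
        // An invalid code drops the offer and returns to confirmation.
        return event.valid
          ? { ...order, state: "confirming" }
          : { ...order, state: "confirming", offerCode: undefined, errors: [...order.errors, "invalid offer code"] };
      }
      break;
    case "brewing":
      if (event.type === "BREW_DONE") return { ...order, state: "completed" };
      break;
  }
  return reject(order, event);
}

// Usage: replay a happy-path conversation.
const events: OrderEvent[] = [
  { type: "GREETED" },
  { type: "ITEM_ADDED", item: "flat white" },
  { type: "CONFIRM" },
  { type: "OFFER_CODE", code: "ROBOT10" },
  { type: "OFFER_RESULT", valid: true },
  { type: "CONFIRM" },
  { type: "BREW_DONE" },
];
const finalOrder = events.reduce<Order>(transition, { state: "greeting", items: [], errors: [] });
console.log(finalOrder.state); // "completed"
```

Rejected events are recorded in `errors` instead of throwing, so a voice agent could read them back and ask the customer to rephrase; this is a design choice for the sketch, not a requirement of the approach.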